| language | license | pipeline_tag |
|---|---|---|
| en | cc-by-nc-sa-4.0 | document-question-answering |

LayoutLM for Invoices

This is a fine-tuned version of the multi-modal LayoutLM model for the task of question answering on invoices and other documents. It has been fine-tuned on a proprietary dataset of invoices as well as both SQuAD2.0 and DocVQA for general comprehension.

Non-consecutive tokens

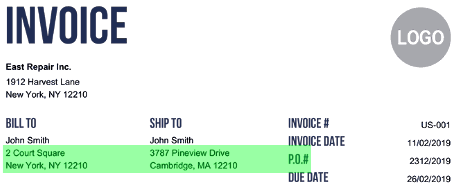

Unlike other QA models, which can only extract consecutive tokens (because they predict the start and end of a sequence), this model can predict longer-range, non-consecutive sequences with an additional classifier head. For example, QA models often encounter this failure mode:

Before

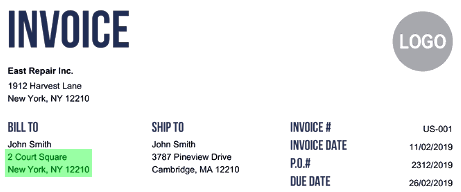

After

However this model is able to predict non-consecutive tokens and therefore the address correctly:
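To make the difference between span decoding and non-consecutive token selection concrete, here is a minimal pure-Python sketch. It assumes the additional classifier head outputs an independent probability per token that the token belongs to the answer, decoded with a hypothetical 0.5 threshold; the actual head in this model and in DocQuery may be structured and decoded differently. The tokens, logits, and probabilities are made-up values for a two-line address interrupted by unrelated text in reading order.

```python
from typing import List, Tuple


def best_consecutive_span(
    start_logits: List[float], end_logits: List[float], max_len: int = 15
) -> Tuple[int, int]:
    """Standard extractive QA decoding: choose the (start, end) pair with
    start <= end that maximizes start_score + end_score. The answer is
    always one contiguous run of tokens."""
    best, best_score = (0, 0), float("-inf")
    for s, s_score in enumerate(start_logits):
        for e in range(s, min(s + max_len, len(end_logits))):
            score = s_score + end_logits[e]
            if score > best_score:
                best, best_score = (s, e), score
    return best


def select_tokens(token_probs: List[float], threshold: float = 0.5) -> List[int]:
    """Hypothetical per-token head: keep every token whose probability of
    belonging to the answer exceeds the threshold, whether or not the kept
    tokens are adjacent."""
    return [i for i, p in enumerate(token_probs) if p > threshold]


# Made-up example: a two-line address split by unrelated text in reading order.
tokens = ["Bill", "To:", "123", "Main", "St", "Invoice", "#42", "Springfield,", "IL"]
start_logits = [0.0, 0.0, 5.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0]
end_logits = [0.0, 0.0, 0.0, 0.0, 4.0, 0.0, 0.0, 0.0, 3.0]
token_probs = [0.01, 0.05, 0.97, 0.96, 0.95, 0.10, 0.08, 0.93, 0.91]

s, e = best_consecutive_span(start_logits, end_logits)
print("span:", " ".join(tokens[s : e + 1]))  # span: 123 Main St
print("tokens:", " ".join(tokens[i] for i in select_tokens(token_probs)))
# tokens: 123 Main St Springfield, IL
```

A span decoder can only return the first address line, or a wider window that also swallows "Invoice #42"; scoring each token independently lets the second line be included without the text in between.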

Getting started with the model

The best way to use this model is via DocQuery.

About us

This model was created by the team at Impira.