
| language | license |

|---|---|

| en | apache-2.0 |

DistilBERT base uncased finetuned SST-2


Model Details

Model Description: This model is a fine-tuned checkpoint of DistilBERT-base-uncased, fine-tuned on SST-2. It reaches an accuracy of 91.3% on the dev set (for comparison, the bert-base-uncased version of BERT reaches an accuracy of 92.7%).

- Developed by: Hugging Face

- Model Type: Text Classification

- Language(s): English

- License: Apache-2.0

- Parent Model: DistilBERT-base-uncased. For more details about DistilBERT, we encourage users to check out its model card.

- Resources for more information:

How to Get Started With the Model

Example of single-label classification:

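    import torch
    from transformers import DistilBertTokenizer, DistilBertForSequenceClassification

    # Load the fine-tuned SST-2 checkpoint rather than the base
    # "distilbert-base-uncased" model, whose classification head is untrained.
    # The Hub ID below is inferred from this card's title.
    checkpoint = "distilbert-base-uncased-finetuned-sst-2-english"
    tokenizer = DistilBertTokenizer.from_pretrained(checkpoint)
    model = DistilBertForSequenceClassification.from_pretrained(checkpoint)

    inputs = tokenizer("Hello, my dog is cute", return_tensors="pt")

    # Run inference without tracking gradients.
    with torch.no_grad():
        logits = model(**inputs).logits

    # Map the highest-scoring class index to its label name.
    predicted_class_id = logits.argmax().item()
    print(model.config.id2label[predicted_class_id])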
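The snippet above only reports the top label. To get a probability for each label, the logits can be passed through a softmax. The following is a minimal sketch in plain Python; the logit values and the `[NEGATIVE, POSITIVE]` ordering are assumptions made for illustration, so check `model.config.id2label` for the actual mapping.

```python
import math

def softmax(logits):
    """Convert raw logits into probabilities that sum to 1."""
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for one sentence, assumed to be ordered
# [NEGATIVE, POSITIVE]; real values come from model(**inputs).logits.
example_logits = [-2.0, 2.0]

probs = softmax(example_logits)
# Index of the most probable class, equivalent to the argmax of the logits.
predicted_class_id = max(range(len(probs)), key=probs.__getitem__)
```

With PyTorch tensors, `torch.softmax(logits, dim=-1)` performs the same computation directly on the model output.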

Uses

Direct Use

This model can be used for binary sentiment classification of English text, the task it was fine-tuned on with SST-2. The raw parent model can be used for masked language modeling, but it's mostly intended to be fine-tuned on a downstream task. See the model hub to look for fine-tuned versions on a task that interests you.

Misuse and Out-of-scope Use

The model should not be used to intentionally create hostile or alienating environments for people. In addition, the model was not trained to produce factual or true representations of people or events, so using it to generate such content is out of scope for its abilities.

Risks, Limitations and Biases

Based on a few experiments, we observed that this model could produce biased predictions that target underrepresented populations.

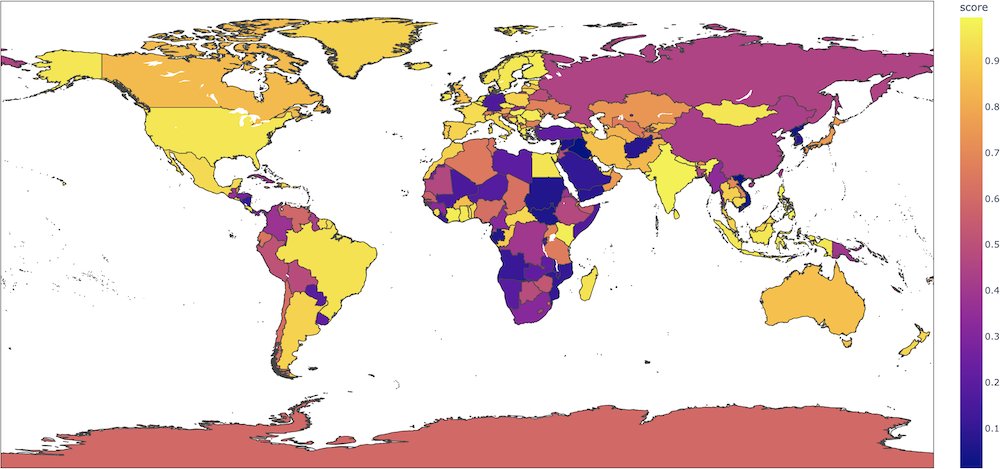

For instance, for sentences like "This film was filmed in COUNTRY", this binary classification model gives radically different probabilities for the positive label depending on the country (0.89 if the country is France, but 0.08 if the country is Afghanistan), even though nothing in the input indicates such a strong semantic shift. In this colab, Aurélien Géron made an interesting map plotting these probabilities for each country.

We strongly advise users to thoroughly probe these aspects on their use cases in order to evaluate the risks of this model. We recommend looking at the following bias evaluation datasets as a place to start: WinoBias, WinoGender, StereoSet.
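One way to start probing is to repeat the template experiment described above on your own inputs. The sketch below is illustrative only: `probe_template`, `spread`, and `stub_score` are hypothetical helpers, and `stub_score` is a stand-in that simply returns the two probabilities quoted in this section so the example runs without downloading the model. In practice you would pass a function that returns the model's positive-label probability for a sentence.

```python
from typing import Callable, Dict, Iterable

TEMPLATE = "This film was filmed in {country}"

def probe_template(score_positive: Callable[[str], float],
                   countries: Iterable[str],
                   template: str = TEMPLATE) -> Dict[str, float]:
    """Score the filled-in template once per country."""
    return {c: score_positive(template.format(country=c)) for c in countries}

def spread(scores: Dict[str, float]) -> float:
    """Gap between the highest and lowest positive-label probability."""
    return max(scores.values()) - min(scores.values())

# Stand-in scorer (an assumption, not the model): it returns the two
# figures quoted in this section. Replace it with real model scoring.
_QUOTED = {"France": 0.89, "Afghanistan": 0.08}

def stub_score(sentence: str) -> float:
    for country, probability in _QUOTED.items():
        if country in sentence:
            return probability
    raise KeyError(f"no stub score for: {sentence!r}")

scores = probe_template(stub_score, ["France", "Afghanistan"])
gap = spread(scores)  # a large gap on a neutral template suggests bias
```

Because the template carries no sentiment of its own, any large gap between entities is attributable to the entity name rather than to the sentence.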

Training

Training Data

The authors fine-tuned the model on the Stanford Sentiment Treebank (SST-2) corpus.

Training Procedure

Fine-tuning hyper-parameters

- learning_rate = 1e-5

- batch_size = 32

- warmup = 600

- max_seq_length = 128

- num_train_epochs = 3.0
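To give a sense of scale for these values, the sketch below estimates the number of optimizer steps and models one possible learning-rate schedule. Several things here are assumptions not stated in this card: that `warmup` counts optimizer steps, that the schedule is linear warmup followed by linear decay, that training ran on a single device without gradient accumulation, and that the SST-2 training split has 67,349 examples (the GLUE figure).

```python
import math

# Values listed in this card.
LEARNING_RATE = 1e-5
BATCH_SIZE = 32
WARMUP_STEPS = 600      # assumed to be optimizer steps
NUM_TRAIN_EPOCHS = 3

# Assumption: size of the GLUE SST-2 training split.
TRAIN_EXAMPLES = 67_349

steps_per_epoch = math.ceil(TRAIN_EXAMPLES / BATCH_SIZE)
total_steps = steps_per_epoch * NUM_TRAIN_EPOCHS

def lr_at(step: int) -> float:
    """Learning rate at a given step under linear warmup, then linear decay.

    The schedule shape is an assumption for illustration.
    """
    if step < WARMUP_STEPS:
        # Ramp linearly from 0 up to the peak learning rate.
        return LEARNING_RATE * step / WARMUP_STEPS
    # Decay linearly from the peak to 0 at the final step.
    remaining = max(0, total_steps - step)
    return LEARNING_RATE * remaining / (total_steps - WARMUP_STEPS)
```

Under these assumptions the warmup covers roughly the first tenth of training (600 of 6,315 steps).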